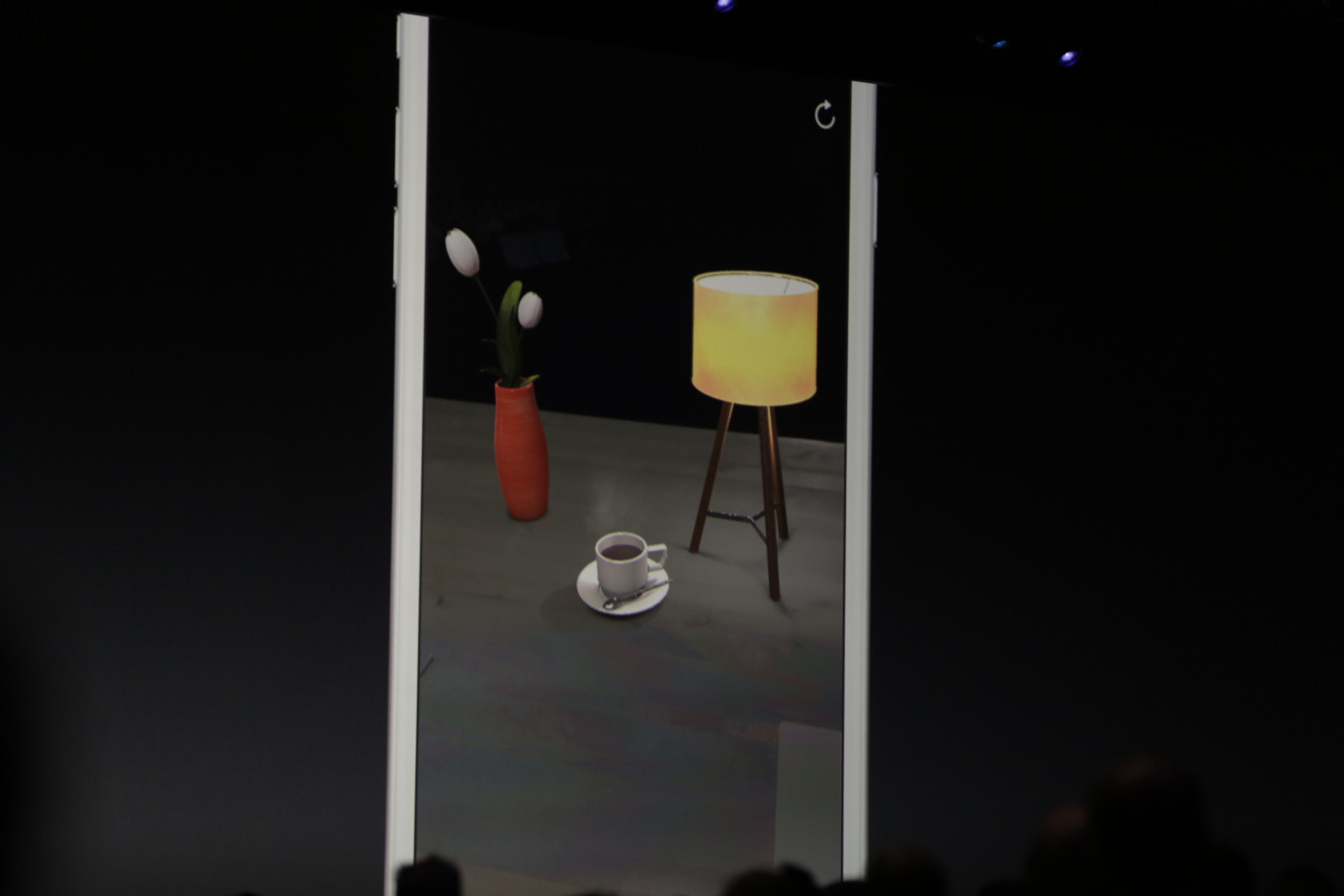

ARKit is the “largest AR platform in the world,” according to Apple’s keynote address at WWDC 2017. So what can it do? As the demos showed, ARKit enables surface tracking and depth perception, and it integrates with existing libraries from the game development world. Note: If you’re here to learn how to make ARKit apps, I’ve got a detailed tutorial over here: ARKit Tutorial.

Most significantly, Apple showed that both the Unreal and Unity game engines will integrate with ARKit, opening the door to Pokémon Go-style apps. In the demos, multiple virtual objects interacted with each other, casting shadows on one another and even reacting physically. Some say this development means Apple will soon release a HoloLens-style device. I personally think they just want to enable better experiences, like what Snapchat offers today.

On the other hand, ARKit will also give developers of photo editing apps a better way to dive deep into the contents of users’ photos in order to enable new and improved editing experiences. Deep-learning-powered photo editing should make mobile photo (and video) editing a much better experience.

ARKit has support for:

- Fast, stable motion tracking
- Plane estimation with basic boundaries
- Ambient lighting estimation
- Scale estimation
- Unity, Unreal, and SceneKit integration
- Xcode app templates
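To make a few of these features concrete, here is a minimal Swift sketch of how an app might turn on world tracking (motion tracking), horizontal plane detection, and ambient light estimation. It uses iOS 11 SDK names (`ARWorldTrackingConfiguration`, `ARSCNView`, `ARPlaneAnchor`); the view controller layout and the logging are my own illustrative choices, not Apple's template code. It assumes a device that supports world tracking and an app with a camera usage description in its Info.plist.

```swift
import UIKit
import SceneKit
import ARKit

// Illustrative sketch: a view controller that runs world tracking with
// horizontal plane detection and ambient light estimation.
class ViewController: UIViewController, ARSCNViewDelegate {
    // Assumption: the AR view is created in code rather than in a storyboard.
    let sceneView = ARSCNView(frame: .zero)

    override func loadView() {
        // Use the AR view as the controller's root view.
        view = sceneView
    }

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.delegate = self
        // Let SceneKit light virtual content from ARKit's light estimate.
        sceneView.automaticallyUpdatesLighting = true
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // World tracking provides six-degrees-of-freedom motion tracking.
        let configuration = ARWorldTrackingConfiguration()
        // Ask ARKit to detect horizontal surfaces (plane estimation).
        configuration.planeDetection = .horizontal
        // Light estimation is on by default; set explicitly for clarity.
        configuration.isLightEstimationEnabled = true
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        // Stop camera capture and tracking while the view is hidden.
        sceneView.session.pause()
    }

    // Called when ARKit adds an anchor, such as a newly detected plane.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let planeAnchor = anchor as? ARPlaneAnchor else { return }
        // extent is the plane's estimated size in meters (x = width, z = length).
        print("Detected plane: \(planeAnchor.extent.x) m x \(planeAnchor.extent.z) m")
        // Latest ambient light estimate, in lumens (about 1000 is neutral).
        if let estimate = sceneView.session.currentFrame?.lightEstimate {
            print("Ambient intensity: \(estimate.ambientIntensity)")
        }
    }
}
```

Xcode's Augmented Reality App template generates a broadly similar view controller, so in practice you would likely start from that and add the plane-detection setting and the delegate callback.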