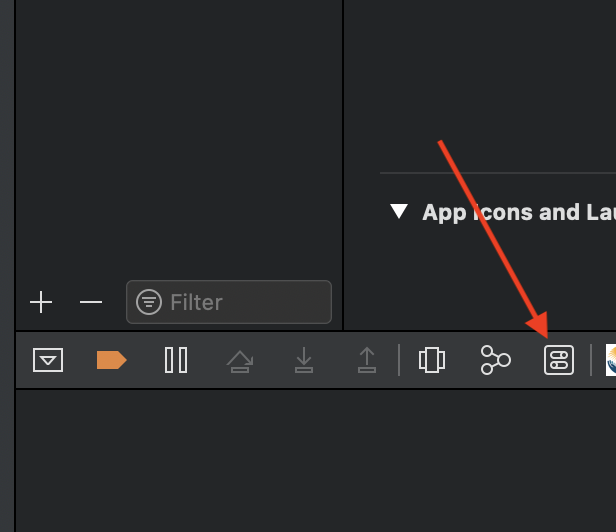

While trying out an old iOS app of mine, I discovered that some of the buttons weren't visible in Dark Mode. So, in this tutorial, with a little extra time I've found for reasons that should be quite obvious, I'll share how to update the UI of your app for Dark Mode. Step 1. Finding the…

ARKit Tutorial in Swift 4 for Xcode 9 using SceneKit

In this tutorial I'm going to show you how to work with ARKit, the new framework from Apple that lets us easily create augmented reality experiences in our iOS apps. The first thing to know about ARKit is that it can be used in three major…

Core NFC Tutorial for NFC on iOS Devices

With the release of iOS 11, third-party developers can use the NFC reader on the iPhone 7 and later for the first time. This could be used to pass along identification information, and for a whole host of other data-exchange applications, from door locks to subway passes. The technology used on iOS 11…

ARKit on iOS 11

ARKit is the "largest AR platform in the world," according to Apple's latest keynote address at WWDC 2017. So what can it do? Well, as we saw in the demos, ARKit enables surface tracking and depth perception, and it integrates with existing libraries from the game development world. Note: If you're here to learn how…

Core ML for iOS Apps in iOS 11

Core ML, announced at WWDC 2017, is a new set of APIs built by Apple for devices running iOS 11 or later. With Core ML, developers can incorporate machine learning models into their mobile apps and have inference accelerated using the Metal APIs. This means the processing of models will be significantly…

Caffe2 on iOS – Deep Learning Tutorial

At this year's F8 conference, Facebook's annual developer event, Facebook announced Caffe2 in collaboration with NVIDIA. This framework gives developers yet another tool for building deep learning networks. But I am super pumped about this one, because it is specifically designed to operate on…

Swift 3 Tutorial – Fundamentals

In this Swift 3 tutorial, we'll focus on how a complete beginner can approach getting to a basic grasp of Swift. We chose to write this tutorial because newcomers will find many tutorials out there that are out of date, so it's not…

Designing Animations with UIViewPropertyAnimator in iOS 10 and Swift 3

This is part of a series of tutorials introducing new features in iOS 10, the Swift programming language, and the new Xcode 8 beta, which were just announced at WWDC 16. UIKit in iOS 10 now has "new object-based, fully interactive and interruptible animation support…

SiriKit Resolutions with Swift 3 and iOS 10 – SiriKit Tutorial (Part 2)

This tutorial was written on June 20th, 2016 using Xcode 8 Beta 1 and the Swift 3.0 toolchain. This post is a follow-up in a multi-part SiriKit tutorial. If you have not read part 1 yet, I recommend starting there…

Creating an iMessage Sticker App on iOS 10 with Swift – Tutorial (Part 1)

This is part of a series of tutorials introducing new features in iOS 10, the Swift programming language, and the new Xcode 8 beta, which were just announced at WWDC 16. Among the most exciting additions in iOS 10 are some of the new app extension types, and…